Below are some interface bandwidth tests on various systems/drives using hdparm -tT. With hdparm, my understanding is:

- cached reads: reads served from the Linux buffer cache with no disk access, so essentially the processor/RAM bandwidth of the host; should be roughly the same for all drives on the same host

- buffered disk reads: the sustained sequential read bandwidth of the drive over its interface, without prior caching. The Jetson 4-lane NVMe speed divided by 4 is about 388 MB/sec, and we got 300 MB/sec on the single-lane i.MX8.
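The per-lane comparison can be reproduced with a little arithmetic. This is a minimal sketch that assumes throughput scales linearly with the number of PCIe lanes (a simplification; the drive itself may be the limit on the 4-lane systems). The function name is illustrative, and the figures are the buffered-read results reported on this page.

```python
def per_lane_estimate(measured_mb_s: float, lanes: int) -> float:
    """Naive per-lane throughput, assuming bandwidth scales linearly with lanes."""
    return measured_mb_s / lanes


jetson_x4 = 1553.92  # MB/sec, Jetson Orin Nano NVMe over 4 lanes (from this page)
imx8_x1 = 299.57     # MB/sec, i.MX8 NVMe over 1 lane (from this page)

expected_x1 = per_lane_estimate(jetson_x4, 4)
fraction = imx8_x1 / expected_x1

print(f"Estimated single-lane rate: {expected_x1:.0f} MB/sec")
print(f"i.MX8 reached {fraction:.0%} of that estimate")
```

Under that assumption the i.MX8 lands at roughly three quarters of the scaled-down Jetson figure.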

Note, the i.MX8 setup in this test had some hardware modifications to the PCIe signals that did not comply with the routing requirements for high-speed signals, so its NVMe performance may be degraded in this test. Will update this once we have the next rev.

Note, each result below is from a single run; results varied somewhat from run to run.

Observations:

- Jetson NVMe speed is almost as fast as the AMD workstation (1,554 vs 1,847).

- NVMe read bandwidth on the AMD workstation is more than 5 times that of the SSD.

- the i.MX8 single-lane NVMe speed is less than 1/4 the speed of the 4-lane systems.

- the eMMC on the i.MX8 is almost as fast as the NVMe (280 vs 300).

- SD is quite slow compared to eMMC (70/85 vs 280).

- SD speeds on Jetson and i.MX8 were similar (70 vs 85).
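The ratios behind these observations can be checked with a short script. This is only a sketch: the dictionary keys and the `ratio` helper are illustrative names, and the values are the buffered disk read rates from the hdparm output on this page.

```python
# Buffered disk read rates in MB/sec, taken from the hdparm output on this page.
rates = {
    "AMD NVMe": 1847.35,
    "AMD SSD": 332.61,
    "i.MX8 eMMC": 280.14,
    "i.MX8 SD": 85.06,
    "i.MX8 NVMe": 299.57,
    "Orin SD": 70.04,
    "Orin NVMe": 1553.92,
}


def ratio(a: str, b: str) -> float:
    """Return the read rate of configuration a relative to configuration b."""
    return rates[a] / rates[b]


comparisons = [
    ("Orin NVMe", "AMD NVMe"),     # Jetson vs workstation NVMe
    ("AMD NVMe", "AMD SSD"),       # NVMe vs SSD on the same host
    ("i.MX8 eMMC", "i.MX8 NVMe"),  # eMMC vs single-lane NVMe
    ("i.MX8 eMMC", "i.MX8 SD"),    # eMMC vs SD
    ("i.MX8 SD", "Orin SD"),       # SD across platforms
]

for a, b in comparisons:
    print(f"{a} / {b} = {ratio(a, b):.2f}")
```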

Summary of test results:

| Platform | Disk | Disk Read Bandwidth |
|---|---|---|
| AMD | NVMe | 1,847 MB/sec |
| AMD | SSD | 333 MB/sec |
| i.MX8 | SD | 85 MB/sec |
| i.MX8 | eMMC | 280 MB/sec |
| i.MX8 | NVMe | 300 MB/sec |
| Orin | SD | 70 MB/sec |
| Orin | NVMe | 1,554 MB/sec |

AMD Ryzen 3900X Workstation

NVMe Samsung SSD 970 EVO 500GB

/dev/nvme0n1:

Timing cached reads: 32046 MB in 1.99 seconds = 16081.96 MB/sec

Timing buffered disk reads: 5544 MB in 3.00 seconds = 1847.35 MB/sec

SSD

/dev/sda:

Timing cached reads: 31542 MB in 1.99 seconds = 15834.95 MB/sec

Timing buffered disk reads: 998 MB in 3.00 seconds = 332.61 MB/sec

i.MX8 QuadMax

eMMC

/dev/mmcblk0:

Timing cached reads: 1592 MB in 2.00 seconds = 795.83 MB/sec

Timing buffered disk reads: 842 MB in 3.01 seconds = 280.14 MB/sec

SD

/dev/mmcblk1:

Timing cached reads: 1620 MB in 2.00 seconds = 810.12 MB/sec

Timing buffered disk reads: 256 MB in 3.01 seconds = 85.06 MB/sec

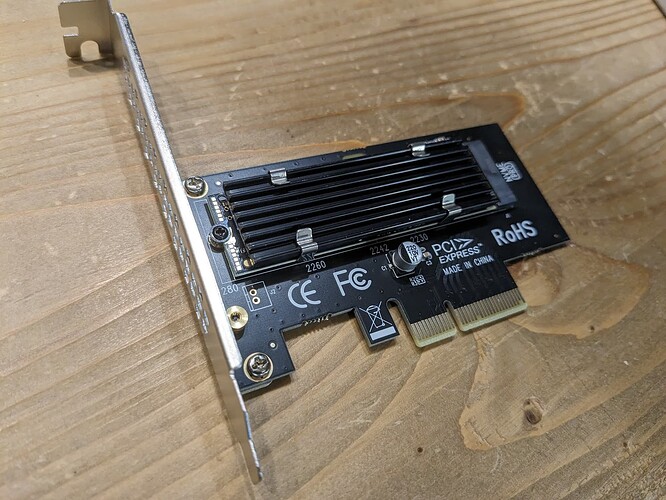

NVMe (1 lane) Samsung 970 EVO Plus 1TB

/dev/nvme0n1:

Timing cached reads: 1582 MB in 2.00 seconds = 790.66 MB/sec

Timing buffered disk reads: 900 MB in 3.00 seconds = 299.57 MB/sec

Jetson Orin Nano

SD

/dev/mmcblk1:

Timing cached reads: 6080 MB in 2.00 seconds = 3043.07 MB/sec

Timing buffered disk reads: 212 MB in 3.03 seconds = 70.04 MB/sec

NVMe (4 lane) Samsung 970 EVO Plus 1TB

/dev/nvme0n1p1:

Timing cached reads: 7836 MB in 2.00 seconds = 3922.64 MB/sec

Timing buffered disk reads: 4664 MB in 3.00 seconds = 1553.92 MB/sec
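To collect results like these into a table without copying numbers by hand, the timing lines can be parsed with a regular expression. The sketch below is a hypothetical helper, not part of hdparm; it assumes the output format shown on this page, with rates reported in MB/sec, and would need adjusting if hdparm reports a different unit.

```python
import re

# Matches hdparm timing lines such as:
#   Timing buffered disk reads: 900 MB in 3.00 seconds = 299.57 MB/sec
TIMING_RE = re.compile(
    r"Timing\s+(?P<kind>cached reads|buffered disk reads):\s+"
    r"(?P<mb>\d+)\s+MB\s+in\s+(?P<secs>[\d.]+)\s+seconds\s+=\s+"
    r"(?P<rate>[\d.]+)\s+MB/sec"
)


def parse_hdparm(text: str) -> dict:
    """Map each timing kind found in hdparm -tT output to its rate in MB/sec."""
    return {m["kind"]: float(m["rate"]) for m in TIMING_RE.finditer(text)}


# Sample copied from the i.MX8 single-lane NVMe result on this page.
sample = """\
/dev/nvme0n1:
Timing cached reads: 1582 MB in 2.00 seconds = 790.66 MB/sec
Timing buffered disk reads: 900 MB in 3.00 seconds = 299.57 MB/sec
"""

parsed = parse_hdparm(sample)
print(parsed)
```

The same function could be fed the captured output of each run, which also makes it easier to average several runs per drive.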