Mark Russinovich has stated on Twitter that it’s time to stop using C and C++ for new projects. The following article has more information:

(we also recently discussed this here)

I think Mark’s Twitter posts are mostly click-bait material (what else is Twitter for?), but I posted the above article because it references several studies which indicate that most security bugs stem from memory safety problems in C and C++ code. I searched around for the original studies (not ZDNet’s reinterpretations) and linked them below:

- /blog/2019/07/a-proactive-approach-to-more-secure-code/

- MSRC-Security-Research/presentations/2019_02_BlueHatIL/2019_01 - BlueHatIL - Trends, challenge, and shifts in software vulnerability mitigation.pdf at master · microsoft/MSRC-Security-Research · GitHub

- Chromium project: Memory Safety

I also have experience in fairly large C++ applications and can relate to the challenges of writing bug-free C++ code:

C++ is still used extensively for embedded MCUs, native UIs (Qt), games, machine learning, and other performance-intensive tasks. It is still the only option at times. Another class of application is becoming increasingly common in Embedded Linux/IoT systems, where you are managing a number of different things and collecting a fair amount of data. These applications have two attributes:

- a number of different things happening concurrently

- a fair amount of data flowing between these concurrent processes

Writing these applications in C++ is challenging because both concurrency and memory management in C++ are difficult. It only takes one leak or invalid pointer to sink the entire thing, and dealing with these issues in the field is not fun. And concurrency compounds memory management issues – it is the intersection of these two factors that leads to an explosion in complexity, and complexity leads to bugs.

C++ is still a reasonable choice for UIs, as a UI is inherently single threaded because a user can only be doing one thing at a time. C++ is also still reasonable for MCU programming because even though you might have an RTOS with concurrent tasks, there is not a lot of data flowing around – the platform enforces this, as MCUs don’t have a lot of memory compared to MPU platforms.

The browser programming model is also single threaded, and this is adequate because, again, a user can only do one thing at a time. Programming in JavaScript with a single thread can be limiting at times, but eliminating concurrency greatly simplifies programming. The following quote by Ryan Dahl, who wrote Node.js, is interesting:

So, yeah, I think… when it first came out, I went around and gave a bunch of talks, trying to convince people that they should. That maybe we were doing I/O wrong and that maybe if we did everything in a non-blocking way, that we would solve a lot of the difficulties with programming. Like, perhaps we could forget about threads entirely and only use process abstractions and serialized communications. But within a single process, we could handle many, many requests by being completely asynchronous. I believe strongly in this idea at the time, but over the past couple of years, I think that’s probably not the end-all and be-all idea for programming. In particular, when Go came out.

We can also note that the popular Python and Ruby languages are effectively single threaded (their standard interpreters use a global lock that lets only one thread run interpreter code at a time), which is fine if you are only doing one thing. But the minute you need to do lots of different things at the same time, and pass data between these things, you are in a different game.

There are still good uses for C/C++, and perhaps these concerns are overblown at times, but we should also be aware of where the industry is heading. Continuing to implement large, complex systems in C/C++ just because we’ve always done it this way, or because it is the only SDK a vendor provides, is probably not a good strategy. But neither is jumping on the Rust bandwagon just because it’s the industry darling right now.

Go is a pragmatic middle ground that gets you most of the way there, especially if your application involves a lot of “Internet programming” (IoT, edge, cloud, etc.). Go is much easier to write than C++ or Rust, and in many cases almost as fast. It is a good choice for performant, secure, and reliable programs, and as a bonus, the developer experience is tops (easy to cross compile, deploy, etc.). However, Go is boring, and does not fit into any of the neat little programming boxes that are common in industry. It’s not the fastest, safest, purest, … language, so it tends to be discarded. Yet in many cases it may be the most pragmatic choice, as it is a nice balance of all these factors.

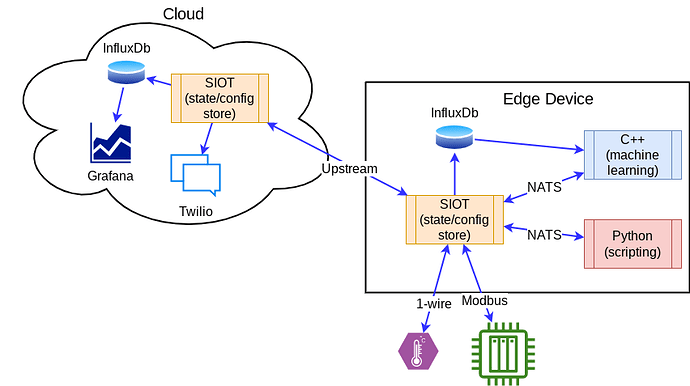
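To make the “many concurrent things, lots of data flowing between them” case concrete, here is a minimal Go sketch. It is only an illustration under stated assumptions: the `Reading` type and the `produce` and `collect` functions are hypothetical names invented for this example, not code from any real project. Several goroutines stand in for concurrent tasks, and a channel carries their data to a single consumer.

```go
package main

import (
	"fmt"
	"sync"
)

// Reading is a hypothetical data point produced by one concurrent task.
type Reading struct {
	Sensor int
	Value  float64
}

// produce simulates one concurrent task that sends n readings on out.
func produce(sensor, n int, out chan<- Reading, wg *sync.WaitGroup) {
	defer wg.Done()
	for i := 0; i < n; i++ {
		out <- Reading{Sensor: sensor, Value: float64(sensor*100 + i)}
	}
}

// collect starts one producer goroutine per sensor and returns the
// per-sensor totals once every reading has been received.
func collect(sensors, perSensor int) map[int]float64 {
	out := make(chan Reading)
	var wg sync.WaitGroup
	for s := 1; s <= sensors; s++ {
		wg.Add(1)
		go produce(s, perSensor, out, &wg)
	}

	// Close the channel after all producers finish so the range loop ends.
	go func() {
		wg.Wait()
		close(out)
	}()

	// Only this goroutine touches totals, so no lock is needed, and the
	// garbage collector handles the lifetime of every Reading.
	totals := make(map[int]float64)
	for r := range out {
		totals[r.Sensor] += r.Value
	}
	return totals
}

func main() {
	totals := collect(3, 4)
	for s := 1; s <= 3; s++ {
		fmt.Printf("sensor %d total: %.0f\n", s, totals[s])
	}
}
```

The point of the sketch is the shape rather than the details: data ownership moves through the channel, so there is no shared mutable state to guard and no manual memory management to get wrong, which is exactly where the equivalent C++ tends to get complicated.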

We also do not need to limit ourselves to one language – we can use multiple in a system. An event-driven architecture using a message bus like NATS is efficient, and in the end is a really good way to implement complex systems that are flexible and easy to scale/change. Your state/communication/core logic can be in Go. Your UI can be written in C/C++ or JavaScript. You can have machine learning algorithms running in Python/C++. But seriously consider writing the core of your system in something other than C++ – the part that is essential to keeping the system running, coordinating the many pieces, and reporting any problems to operators, wherever they may be. This is the architecture that Simple IoT embraces: