AI has a problem, a very large one that will impede its deployment for the foreseeable future. But that didn’t seem to dampen the enthusiasm for AI that I saw at Computex. Yesterday I attended the AI forum held as part of Computex 2024 in Taipei. Among the presenters, and many of the attendees I talked to, there is a general sense that computing is at an inflection point. There’s talk of another industrial revolution coming. Still, as I’ll report below, I think AI has a massive reality check coming.

Since most of the hardware used to build AI systems is made in Taiwan, the emphasis at Computex was naturally on hardware design and fabrication. For the hardware folks, AI is just one big train barreling down the tracks. There was little talk about how AI would be used, what its downsides are, or its social and moral implications. At the moment it’s a modern version of the Oklahoma land rush: there’s so much money sloshing around, but no profits. History looks like it’s about to repeat itself (as it always does). The 1890s saw massive overbuilding of railroads, the late 1990s saw massive overbuilding of fiber optic networks, and now we have a massive build-out of AI. I expect the same outcome as in the 1890s and 1990s: 90% of the companies involved will be bankrupt within 5 years.

Here’s what the stakeholders are saying. The first session in the morning began with Marc Hamilton of Nvidia giving a rousing pep rally talk about Nvidia’s philosophy of IBTG (Infrastructure, Build, Train, Go) and about Nvidia’s NIMs, or Nvidia Inference Microservices. Basically, NIMs are prepackaged AI models, tuned for specific applications and delivered as microservices. The real star of the show, though, was the Blackwell GPU. He showed it off, explained how it works, and described how multiple Blackwell ‘blades’ communicate within a rack. He mentioned that Nvidia’s approach to integrating the Blackwell GPUs with its CPUs supposedly saves some power. Yet he didn’t seem to bat an eye when mentioning that a single rack with 72 Blackwell GPUs requires 100 kW of power to run. Therein lies the single largest roadblock to wider deployment of AI “factories” (they don’t call them “data centers” anymore): there’s just not enough power to go around.
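To put the rack figure in perspective, here is a back-of-the-envelope sketch in Python. It uses only the two numbers quoted in the talk (72 GPUs and 100 kW per rack). As a simplifying assumption, it treats the rack as drawing that full load around the clock. Real utilization, cooling overhead and power conversion losses would all change the result.

```python
# Back-of-the-envelope energy use for one AI rack, using the figures
# quoted in the talk: 72 Blackwell GPUs, 100 kW per rack.
# Assumption (not from the talk): the rack draws that power 24/7.

RACK_POWER_KW = 100.0   # quoted rack power
GPUS_PER_RACK = 72      # quoted GPU count
HOURS_PER_YEAR = 24 * 365


def per_gpu_kw(rack_kw: float, gpus: int) -> float:
    """Average rack power per GPU slot (includes the share of CPUs, networking, etc.)."""
    return rack_kw / gpus


def annual_mwh(rack_kw: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy in MWh if the rack draws rack_kw continuously for `hours`."""
    return rack_kw * hours / 1000.0


if __name__ == "__main__":
    print(f"{per_gpu_kw(RACK_POWER_KW, GPUS_PER_RACK):.2f} kW per GPU slot")
    print(f"{annual_mwh(RACK_POWER_KW):,.0f} MWh per rack per year")
```

Under that assumption, each GPU slot accounts for roughly 1.4 kW. A single rack would use on the order of 876 MWh a year.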

Other presenters talked about how they were using AI in their businesses. Google’s John Solomon did a lot of hand-waving about Google’s generic AI offerings. I think Google is seriously worried about how it will remain relevant once AI takes over its search function.

Then there are the true believers, such as Tom Anderson from Synopsys. For many years, Synopsys has made software tools that help semiconductor companies design better chips. He claims that in less than 5 years, 70% of mobile applications will be created by AI, and that AI is the only way forward.

Xia Zhang from AWS also did a lot of hand-waving and didn’t give much information. Basically, AWS is trying to figure out what to do with (as in, how to monetize) the massive amounts of data that come with AI.

The afternoon sessions started off with Praveen Vaidyanathan from Micron Technology. He talked a little about his favorite topic, HBM (high bandwidth memory), and showed some slides. But he did say one interesting thing, and I’m quoting him here: “AI is for accumulating knowledge, humans are for getting wisdom.” That idea deserves to be explored in much more depth.

Eddie Ramirez from ARM was the first to mention the elephant in the room. He stated that there is not enough power generation on the planet to run all the proposed AI factories. So his pitch was about how ARM is going to save AI by reducing power consumption in the CPU part of the GPU/CPU lash-up. Nvidia will probably be the biggest beneficiary of ARM’s efforts, although Google claims to be using some ARM CPUs in its data centers. For inference in data centers, the claim is that ARM can deliver 2.5 to 3 times the tokens per watt of x86-based systems. ARM has also taken an interesting approach to the power problem: optimizing some CPUs for inference so that low-end systems would not need GPUs at all.
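The tokens-per-watt claim translates directly into energy per token. The sketch below assumes “tokens per watt” means tokens per second per watt (that is, tokens per joule), so its reciprocal gives the energy each token costs. The function name is hypothetical, just for illustration.

```python
# Convert a tokens-per-watt advantage into relative energy per token.
# Assumption: "tokens per watt" means tokens per second per watt, i.e.
# tokens per joule, so its reciprocal is joules per token.


def relative_energy_per_token(tokens_per_watt_ratio: float) -> float:
    """Energy per token on the ARM system as a fraction of the x86 baseline.

    tokens_per_watt_ratio: how many times more tokens per watt ARM delivers.
    """
    if tokens_per_watt_ratio <= 0:
        raise ValueError("ratio must be positive")
    return 1.0 / tokens_per_watt_ratio


if __name__ == "__main__":
    # The range quoted in the talk: 2.5x to 3x tokens per watt.
    for ratio in (2.5, 3.0):
        share = relative_energy_per_token(ratio)
        print(f"{ratio}x tokens/watt -> {share:.0%} of x86 energy per token")
```

If the claim holds, an ARM system would spend roughly 33% to 40% of the energy per token that an x86 system does.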

Rosalina Hiu from Seagate Technology explained some new ways to increase hard drive capacity. She made a good point about the need to preserve all of the data used to train an AI model in order to “preserve the source of truth”. The TCO of SSDs in data centers is about 6X that of hard drives (HDDs). As a result, data centers still store about 90% of their data on HDDs, so HDDs are not going away any time soon. Storage density keeps increasing, and the cost per gigabyte is half that of SSDs. And unlike AI hardware, HDDs are actually becoming more power-efficient: Seagate’s new 30TB drive supposedly consumes only 0.32 W/TB.

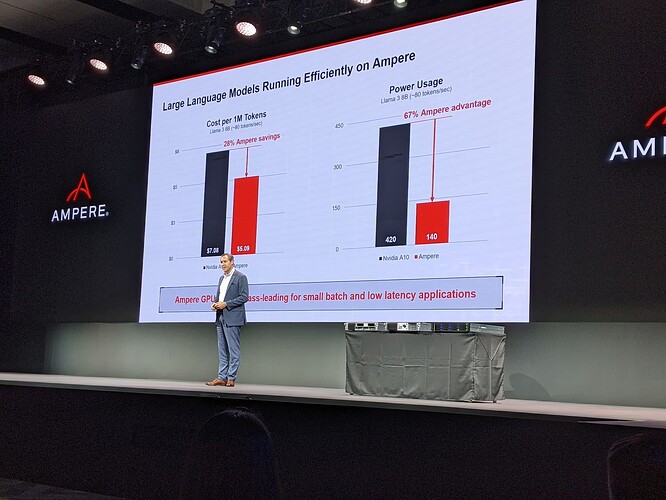
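Seagate’s per-terabyte rating is easy to convert to per-drive and per-petabyte figures. This sketch simply multiplies capacity by the quoted 0.32 W/TB. It assumes the rating applies uniformly across capacity, and it says nothing about which operating state (idle or active) the figure describes.

```python
# Convert a watts-per-terabyte rating into drive-level and per-petabyte power.
# Input figures are the ones quoted in the talk (30 TB drive, 0.32 W/TB);
# treating the rating as a flat per-TB number is an assumption.

WATTS_PER_TB = 0.32
DRIVE_CAPACITY_TB = 30


def drive_watts(capacity_tb: float, watts_per_tb: float = WATTS_PER_TB) -> float:
    """Power of one drive implied by its per-terabyte rating."""
    return capacity_tb * watts_per_tb


def watts_per_petabyte(watts_per_tb: float = WATTS_PER_TB) -> float:
    """Power needed to keep 1 PB (1,000 TB) online at the given rating."""
    return 1000 * watts_per_tb


if __name__ == "__main__":
    print(f"{drive_watts(DRIVE_CAPACITY_TB):.1f} W per {DRIVE_CAPACITY_TB} TB drive")
    print(f"{watts_per_petabyte():.0f} W per petabyte")
```

At that rating, one 30 TB drive draws about 9.6 W, and a petabyte of such storage draws roughly 320 W.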

Jeff Wittich from Ampere again made the point that power consumption is the most challenging constraint on the expansion of AI today. In 2022, data center power consumption was 460 TWh. If the present growth rate continues, data centers worldwide will consume 1,050 TWh by 2026. That’s more than the entire country of Japan. Even if that much power could be generated, the power grid can’t handle such a large increase in capacity. So after the doom and gloom speech, we got the Ampere marketing presentation. Like ARM, Ampere is attacking the power problem by developing processors that use less power than x86. Here is part of Jeff’s presentation:

The last presenter, K.S. Pua from Phison, seemed to be the most realistic of the hardware presenters. He posed a question: will generative AI be profitable in the mid-term? He thinks not, and it obviously isn’t profitable now. He had concerns about models possibly being trained on sensitive (personal) information. For cloud-based AI systems, the costs of training models have no limit. For edge-based servers, training is currently too expensive. He thinks that unless edge-based model training becomes reasonably affordable (at least for businesses), AI is heading for a “bubble”. So Pua’s goal is to create affordable hardware that’s powerful enough to do AI tasks. He says we should own all of our own data, train our own models, and especially run our own inference engines.

To summarize, his concerns are:

- Ownership of generative AI is unclear

- Great risk of sensitive data leaking

- Cloud systems not suitable for sensitive data users

- Permanent subscription fees

- Unpredictable long term costs

But if you own your own AI system and (marketing plug) use his software to help create your models, he claims the benefits are:

- You own your own AI

- You own your own data

- No leak of sensitive data

- Suitable for sensitive data users

- One time and predictable costs

Nvidia is the clear front-runner today in hardware design for AI “factories”. But its future growth is likely to be stifled by a lack of the power needed to run them. It looks like widespread deployment of AI is headed for a rough patch.