In modern networked systems, there is often a debate about how to partition the system.

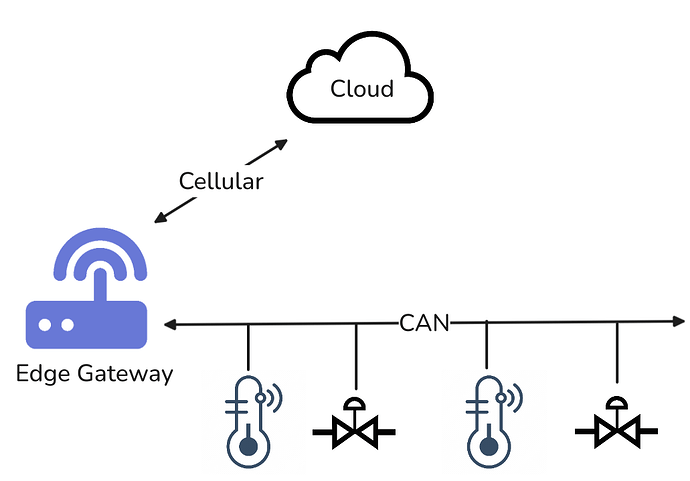

One example, shown in the diagram, has three levels: cloud, gateway, and IO (input/output) nodes.

All of these devices have a processor and can run software.

So where should the processing happen? There are two basic philosophies:

- Push as much of the processing upstream to the cloud (where you have “infinite” resources) and never update downstream devices.

- Do as much as possible at the gateway and IO nodes: make decisions locally whenever local data allows it, and push only processed data and events upstream. This requires routinely updating the downstream devices as needs change and better algorithms are developed.
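As a rough illustration of the second approach, an IO node can use report-by-exception: it keeps reading its sensor locally but forwards a value upstream only when the value has changed meaningfully. The minimal Python sketch below assumes a simple deadband rule; the `DeadbandFilter` class and the 0.5 threshold are hypothetical and not taken from any particular product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DeadbandFilter:
    """Hypothetical edge-side filter: forward a reading upstream only when it
    differs from the last reported value by more than `deadband`."""
    deadband: float
    last_reported: Optional[float] = None

    def process(self, reading: float) -> Optional[float]:
        # Always report the first reading so upstream has a baseline.
        if self.last_reported is None or abs(reading - self.last_reported) > self.deadband:
            self.last_reported = reading
            return reading
        # Change is within the deadband: handle it locally, send nothing.
        return None


if __name__ == "__main__":
    f = DeadbandFilter(deadband=0.5)
    readings = [20.0, 20.1, 20.3, 21.0, 21.2, 19.9]
    sent = [r for r in readings if f.process(r) is not None]
    print(sent)  # [20.0, 21.0, 19.9]
```

In this run only three of the six readings leave the node. A real device might also send an occasional heartbeat so that upstream can tell "nothing changed" apart from "node offline".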

Initially, the first approach seems simpler and may be appropriate in some cases.

You can centralize logic and decisions as much as possible.

It is easy to update the system – you only have to update one central piece.

Until you need to scale, or there is a network disruption …

And you have 50 IO nodes per site sending frequent unprocessed data all the way to the cloud, with decisions coming back down even when nothing is changing.

And then you eventually have 1000 sites.

And then there is a cloud outage and the system is in limbo until the cloud issue is fixed. All data collected during the outage is lost.

And someone damages the CAN bus cable and an actuator is left in the ON state and a tank overflows because no one is making decisions.

And you are storing gigabytes of unprocessed historical data that you never use.
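The cloud-outage scenario is one place where local processing pays off. A common mitigation is store-and-forward: the gateway queues records locally and flushes them, oldest first, once the uplink is back. The sketch below is a hypothetical Python illustration; `StoreAndForward`, the injected `send` callable, and the `max_items` limit are assumptions, and a real gateway would likely persist the queue to disk rather than hold it in memory.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Hypothetical gateway-side queue: buffer outgoing records locally and
    flush them in order whenever the upstream link is available."""

    def __init__(self, send: Callable[[dict], None], max_items: int = 10_000):
        self._send = send
        # Bounded buffer: if an outage outlasts local storage, the oldest
        # records are dropped first.
        self._queue: Deque[dict] = deque(maxlen=max_items)

    def publish(self, record: dict) -> None:
        self._queue.append(record)
        self.flush()

    def flush(self) -> int:
        """Send queued records oldest-first and stop at the first failure.
        Returns the number of records sent."""
        sent = 0
        while self._queue:
            try:
                self._send(self._queue[0])
            except ConnectionError:
                break  # link still down: keep the record and retry later
            self._queue.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._queue)


if __name__ == "__main__":
    delivered = []
    online = False

    def uplink(record: dict) -> None:
        # Simulated cloud connection that fails while `online` is False.
        if not online:
            raise ConnectionError("cloud unreachable")
        delivered.append(record)

    buf = StoreAndForward(uplink)
    buf.publish({"level": 1.2})
    buf.publish({"level": 1.4})
    print(buf.pending())  # 2
    online = True
    print(buf.flush())  # 2
    print(delivered)  # [{'level': 1.2}, {'level': 1.4}]
```

The design choice here is to keep the gateway useful during the outage: nothing collected locally is lost until the local buffer itself fills up.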

Distributed systems are hard, but in the end they scale better and are more resilient. Within reason, the more processing you push to the edge, the better. In these systems, being distributed is not an architectural choice; it is forced on us by the physical locations of the devices. Why not leverage this, since we have to deal with it anyway?
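Pushing decisions to the edge also covers the damaged-cable case: if an IO node keeps a few safety rules of its own, a broken link no longer leaves an actuator stuck ON. The hypothetical sketch below assumes a pump filling a tank, where OFF is the safe state (an assumption; the safe state depends on the process). `FailSafePump`, the 5 s timeout, and the 0.9 level threshold are illustrative only, and the caller passes in the current time so the logic is easy to test.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FailSafePump:
    """Hypothetical IO-node logic for a pump filling a tank.

    The gateway normally sends ON/OFF commands, but the node keeps two local
    rules so it never depends on the network to stay safe:
      1. Watchdog: if no command arrives within `comm_timeout_s`, turn OFF.
      2. Interlock: if the local level reads at or above `high_level`,
         turn OFF regardless of what was commanded.
    """
    comm_timeout_s: float
    high_level: float
    commanded_on: bool = False
    last_command_at: Optional[float] = None

    def on_command(self, on: bool, now: float) -> None:
        # Record the latest command from the gateway and when it arrived.
        self.commanded_on = on
        self.last_command_at = now

    def output(self, level: float, now: float) -> bool:
        """Return True if the pump should run right now."""
        if level >= self.high_level:
            return False  # local interlock wins over any remote command
        if self.last_command_at is None or now - self.last_command_at > self.comm_timeout_s:
            return False  # lost contact with the gateway: fail safe
        return self.commanded_on


if __name__ == "__main__":
    pump = FailSafePump(comm_timeout_s=5.0, high_level=0.9)
    pump.on_command(True, now=0.0)
    print(pump.output(level=0.5, now=1.0))   # True: commanded ON, link healthy
    print(pump.output(level=0.95, now=2.0))  # False: tank nearly full
    print(pump.output(level=0.5, now=10.0))  # False: no command for 10 s
```

On a real node, `now` would come from a monotonic clock such as Python's `time.monotonic()`, so that wall-clock adjustments cannot trip or defeat the watchdog.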

We can compare this to human systems. How well do autocratic, centralized organizations work, where someone at the top makes all the decisions and everyone else is a minion?

They may work OK at a small scale, but even that is questionable.

A student of history soon observes that successful organizations push responsibility down. People at the top provide oversight but get involved in local decision-making only when coordination is required. Trusted people at lower levels pass necessary information up as needed.

Is it any different with hierarchical, distributed digital systems?